Patch-VQ: ‘Patching Up’ the Video Quality Problem

For image quality assessment, check out PaQ-2-PiQ.

Please email yingzhenqiang at gmail dot com for any questions. Thank you!

ABSTRACT

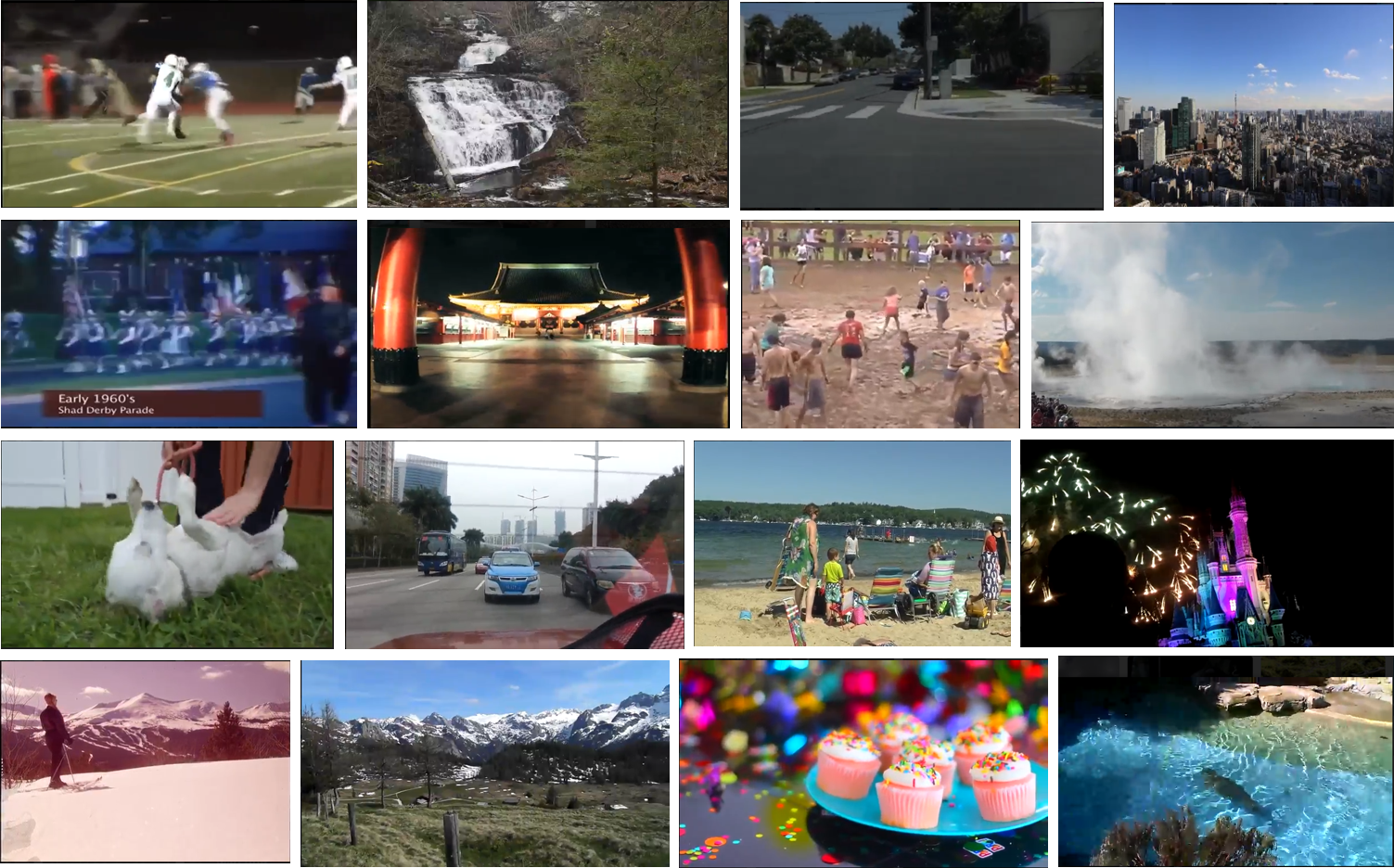

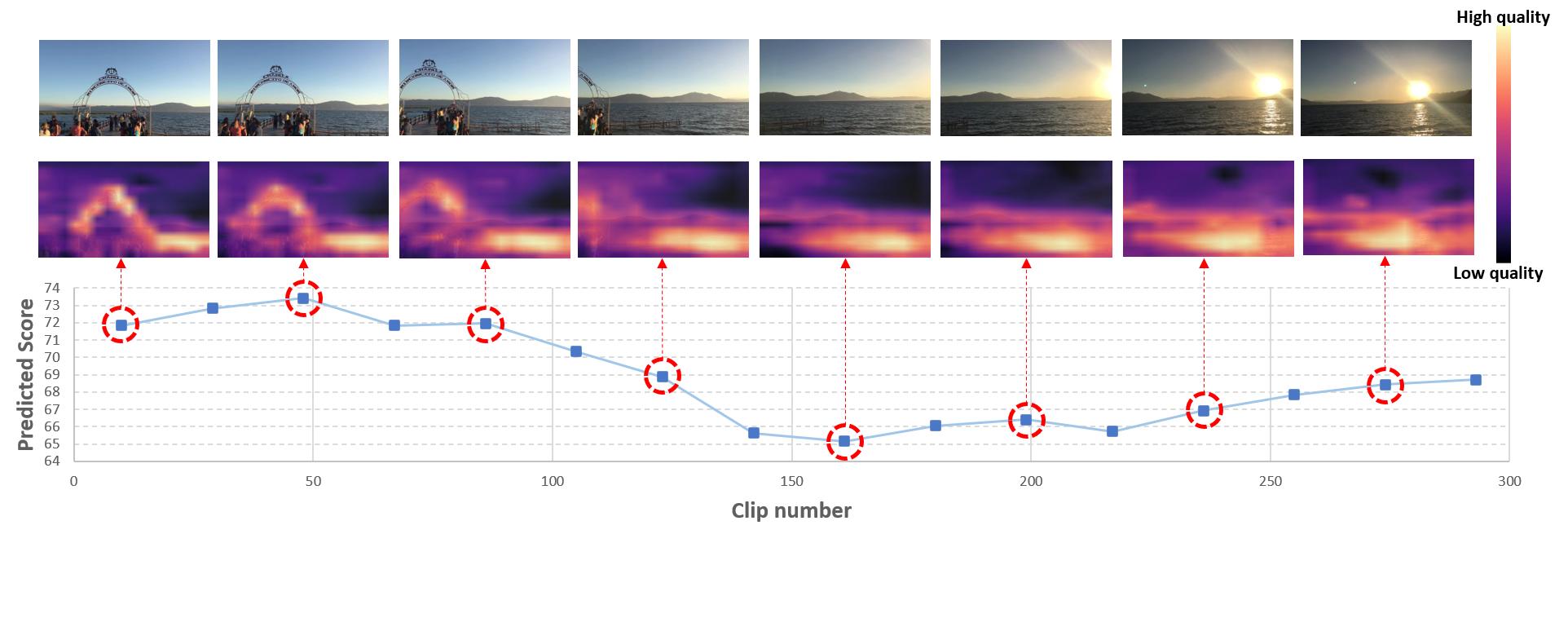

No-reference (NR) perceptual video quality assessment (VQA) is a complex, unsolved, and important problem for social and streaming media applications. Efficient and accurate video quality predictors are needed to monitor and guide the processing of billions of shared, often imperfect, user-generated content (UGC) videos. Unfortunately, current NR models are limited in their prediction capabilities on real-world, "in-the-wild" UGC video data. To advance progress on this problem, we created the largest (by far) subjective video quality dataset, containing 39,000 real-world distorted videos, 117,000 space-time localized video patches ("v-patches"), and 5.5M human perceptual quality annotations. Using this dataset, we created two unique NR-VQA models: (a) a local-to-global region-based NR VQA architecture (called PVQ) that learns to predict global video quality and achieves state-of-the-art performance on 3 UGC datasets, and (b) a first-of-a-kind space-time video quality mapping engine (called PVQ Mapper) that helps localize and visualize perceptual distortions in space and time.
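To make the ideas of a space-time localized "v-patch" and a space-time quality map more concrete, here is a minimal sketch, assuming a decoded video stored as a NumPy array of shape (T, H, W, C). The function names `extract_v_patch` and `quality_map`, the cell sizes, and the stand-in `scorer` (pixel standard deviation) are hypothetical illustrations. This is not the PVQ or PVQ Mapper implementation, and it does not reproduce the dataset's patch-sampling protocol.

```python
import numpy as np
from typing import Callable, Tuple


def extract_v_patch(video: np.ndarray, t0: int, y0: int, x0: int,
                    length: int, height: int, width: int) -> np.ndarray:
    """Crop a space-time patch (hypothetical helper) from a (T, H, W, C) video."""
    T, H, W = video.shape[:3]
    # Reject crops that would run past any edge of the video volume.
    if (t0 < 0 or y0 < 0 or x0 < 0 or t0 + length > T
            or y0 + height > H or x0 + width > W):
        raise ValueError("v-patch extends outside the video")
    return video[t0:t0 + length, y0:y0 + height, x0:x0 + width]


def quality_map(video: np.ndarray, cell: Tuple[int, int, int],
                scorer: Callable[[np.ndarray], float]) -> np.ndarray:
    """Score every non-overlapping (t, h, w) cell and return a 3-D score map.

    `scorer` is a placeholder for any patch-level quality predictor
    (an assumption of this sketch, not the PVQ model). Trailing frames,
    rows, or columns that do not fill a whole cell are ignored.
    """
    ct, ch, cw = cell
    T, H, W = video.shape[:3]
    nt, nh, nw = T // ct, H // ch, W // cw
    scores = np.empty((nt, nh, nw), dtype=np.float64)
    for i in range(nt):
        for j in range(nh):
            for k in range(nw):
                patch = extract_v_patch(video, i * ct, j * ch, k * cw, ct, ch, cw)
                scores[i, j, k] = scorer(patch)
    return scores


# Usage on a synthetic all-black clip: 30 frames of 240x320 RGB.
video = np.zeros((30, 240, 320, 3), dtype=np.uint8)
patch = extract_v_patch(video, t0=5, y0=40, x0=60, length=10, height=96, width=128)
print(patch.shape)  # (10, 96, 128, 3)

# Stand-in scorer: pixel standard deviation, NOT a perceptual quality model.
qmap = quality_map(video, cell=(10, 80, 80), scorer=lambda p: float(p.std()))
print(qmap.shape)  # (3, 3, 4)
```

Tiling into non-overlapping cells keeps the sketch short; a real mapper would replace `scorer` with a trained patch-level predictor and might use overlapping or differently sized patches.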

EXAMPLES

Generated Space-time Quality Maps

References

- Zhenqiang Ying, Maniratnam Mandal, Deepti Ghadiyaram, Alan Bovik. Patch-VQ: 'Patching Up' the Video Quality Problem. In CVPR 2021.

- Zhenqiang Ying, Haoran Niu, Praful Gupta, Dhruv Mahajan, Deepti Ghadiyaram, Alan Bovik. From Patches to Pictures (PaQ-2-PiQ): Mapping the Perceptual Space of Picture Quality. In CVPR 2020.

- Vlad Hosu, Franz Hahn, Mohsen Jenadeleh, Hanhe Lin, Hui Men, Tamás Szirányi, Shujun Li, Dietmar Saupe. The Konstanz natural video database (KoNViD-1k). In 2017 Ninth International Conference on Quality of Multimedia Experience (QoMEX).

- Zeina Sinno, Alan C. Bovik. Large-scale study of perceptual video quality. IEEE Transactions on Image Processing, vol. 28, no. 2, pp. 612-627, Feb. 2019.